Prof Mu has led a successful Research Capital Fund bid at UON, which will help the university invest in key areas that can extend its research and innovation impact ahead of the next REF submission.

The Fund will support the first-phase development of a Metaverse Lab for health services, education, training, and industrial innovation within, but not limited to, the Faculty of Arts, Science and Technology (FAST), the Centre for Advanced and Smart Technologies (CAST), and the Centre for Active Digital Education (CADE).

The Metaverse Lab will address the single biggest challenge facing VR/XR work at the university: many colleagues who want to experiment with immersive technologies for teaching and research simply do not have the resources or technical skills to set up the technology for their work. We’ve witnessed how this technical barrier has stopped so many great ideas from being developed further. My aim is to build an environment where researchers can simply walk into the Lab and start experimenting with the technologies, running user experiments, and collecting research-grade data.

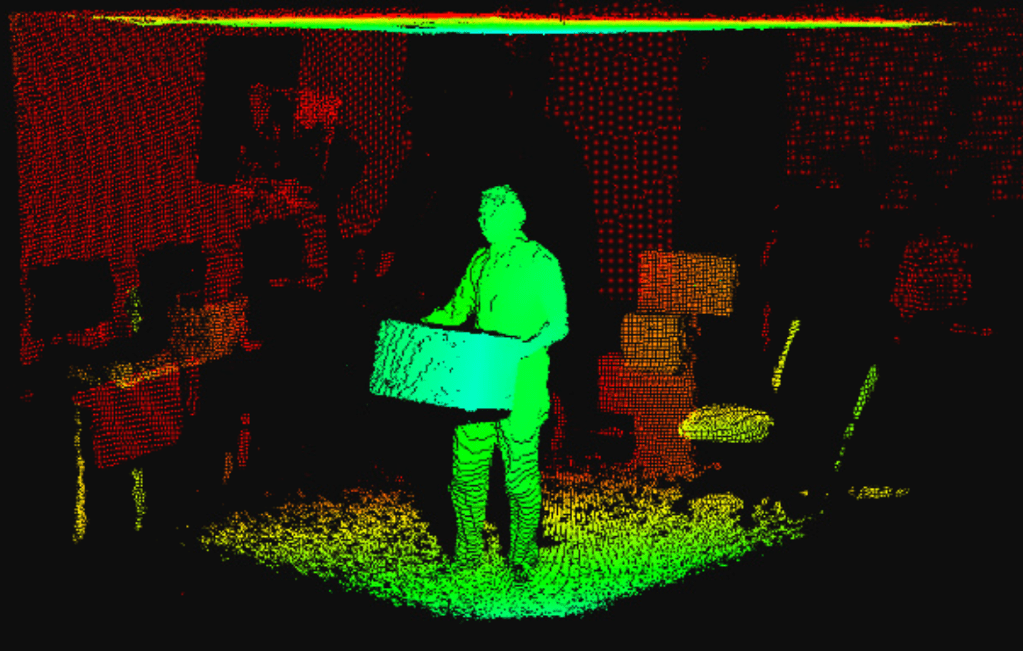

The two screen recordings below show 3D volumetric capture of human subjects using four calibrated cameras. This particular demo was built on cwipc, the CWI Point Clouds software suite. The cameras are placed diagonally to cover all viewing angles of the subjects, so you can change your view by moving around the subject. The cameras also complement each other: when the view from one camera is obstructed, the others can fill in the gap. One of the main advantages of such live capturing systems is their flexibility. No objects need to be scanned in advance; you can simply walk into the recording area and bring any object with you.
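For readers curious how views from several calibrated cameras end up in one shared scene, the Python sketch below shows the basic idea under simplifying assumptions: each camera's calibration is represented as a hypothetical 4x4 camera-to-world matrix, every point is mapped into the common world frame, and the clouds are then concatenated. This is not the cwipc API; the function names and the two-camera example are illustrative only, and a real pipeline would also handle colour, depth-to-point conversion and filtering of overlapping points.

```python
# Hypothetical sketch (not the cwipc API): fuse point clouds from several
# calibrated cameras by mapping each one into a shared world frame.
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix4 = Sequence[Sequence[float]]  # assumed 4x4 row-major camera-to-world transform


def transform_point(m: Matrix4, p: Point) -> Point:
    """Apply a 4x4 rigid transform to a 3D point (homogeneous coordinate w = 1)."""
    x, y, z = p
    return (
        m[0][0] * x + m[0][1] * y + m[0][2] * z + m[0][3],
        m[1][0] * x + m[1][1] * y + m[1][2] * z + m[1][3],
        m[2][0] * x + m[2][1] * y + m[2][2] * z + m[2][3],
    )


def fuse_point_clouds(
    clouds: Sequence[Sequence[Point]], extrinsics: Sequence[Matrix4]
) -> List[Point]:
    """Map each camera's points into the world frame and concatenate them."""
    if len(clouds) != len(extrinsics):
        raise ValueError("need exactly one extrinsic matrix per camera")
    fused: List[Point] = []
    for cloud, m in zip(clouds, extrinsics):
        fused.extend(transform_point(m, p) for p in cloud)
    return fused


if __name__ == "__main__":
    # Camera 1 defines the world frame.
    identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
    # Camera 2: rotated 180 degrees about the vertical (y) axis, placed 2 m along z,
    # so it faces camera 1.
    opposite = [[-1, 0, 0, 0], [0, 1, 0, 0], [0, 0, -1, 2], [0, 0, 0, 1]]
    # Each camera sees the same physical point 1 m in front of it.
    print(fuse_point_clouds([[(0.0, 1.0, 1.0)], [(0.0, 1.0, 1.0)]], [identity, opposite]))
    # prints [(0.0, 1.0, 1.0), (0.0, 1.0, 1.0)]
```

In this toy setup, two facing cameras observe the same point and both observations land on the same world coordinates, which is what lets one camera fill in a region another cannot see.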

The Lab will provide an end-to-end solution, from content generation to distribution and consumption. At the centre of the Metaverse Lab sits an audio-visual volumetric capturing system with several RGB-depth cameras and microphones. This will allow us to seamlessly link virtual and physical environments for complex interactive tasks. The capturing system will connect to our content processing and network emulation toolkit, which prepares the raw data for different use scenarios such as online multiparty interaction. Artificial intelligence will also be an important part of the system, supporting optimisation and data-driven design. Finally, dedicated VR/XR headsets will be added to our arsenal to close the loop.

The system can also be used for motion capture with the Kinect’s Body Tracking SDK. With 32 tracked joints per person, human activities and social behaviour can be analysed. The following two demos show two scenes that I created from live tracking of human activities. The first shows two children playing: the blue child tickles the red child, while the red child holds her arms together, turns her body and moves away. The second shows an adult doing pull-ups. The triangle on the subject’s face marks their eyes and nose, and the two isolated marker points near the eyes are the ears.
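As an illustration of how tracked joints can support activity analysis, the Python sketch below computes the angle at a joint from three 3D joint positions (for example shoulder, elbow and wrist) and then counts pull-up repetitions from a sequence of elbow angles. The function names and the 70°/150° thresholds are hypothetical choices for illustration, not part of the Body Tracking SDK; joint positions are assumed to be 3D coordinates in a single camera frame.

```python
# Hypothetical sketch: derive a joint angle from three tracked joints, then count
# pull-up repetitions from elbow angles over time. Names and thresholds are illustrative.
import math
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


def joint_angle(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Angle ABC in degrees at joint b (shoulder-elbow-wrist gives the elbow angle)."""
    ba = tuple(p - q for p, q in zip(a, b))
    bc = tuple(p - q for p, q in zip(c, b))
    dot = sum(p * q for p, q in zip(ba, bc))
    norm = math.hypot(*ba) * math.hypot(*bc)  # multi-argument hypot needs Python 3.8+
    if norm == 0:
        raise ValueError("joints must not coincide")
    # Clamp to guard against floating-point drift just outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def count_reps(
    elbow_angles: Iterable[float], bent: float = 70.0, straight: float = 150.0
) -> int:
    """Count bend-then-straighten cycles using two thresholds (hysteresis)."""
    reps = 0
    is_bent = False
    for angle in elbow_angles:
        if not is_bent and angle < bent:
            is_bent = True  # arms flexed: top of the pull-up
        elif is_bent and angle > straight:
            is_bent = False  # arms extended again: one full repetition
            reps += 1
    return reps


if __name__ == "__main__":
    shoulder, elbow, wrist = (0.0, 300.0, 0.0), (0.0, 0.0, 0.0), (300.0, 0.0, 0.0)
    print(joint_angle(shoulder, elbow, wrist))
    print(count_reps([170, 160, 60, 50, 155, 170, 65, 160]))
```

Using two thresholds rather than a single cut-off keeps small jitter around one value from being counted as extra repetitions. Because only directions matter, the angle does not depend on the units the tracker reports.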

We envisage multiple impact areas, including computational psychiatry (VR health assessment and therapies), professional training (policing, nursing, engineering, etc.), arts and performance (UKRI has just announced a new framework, “Enter the metaverse: Investment into UK creative industries”), social science (e.g., ethical challenges in the Metaverse), and esports (the video gaming industry). We also look forward to expanding our external partnerships through industrial collaborations, business support and other channels.
